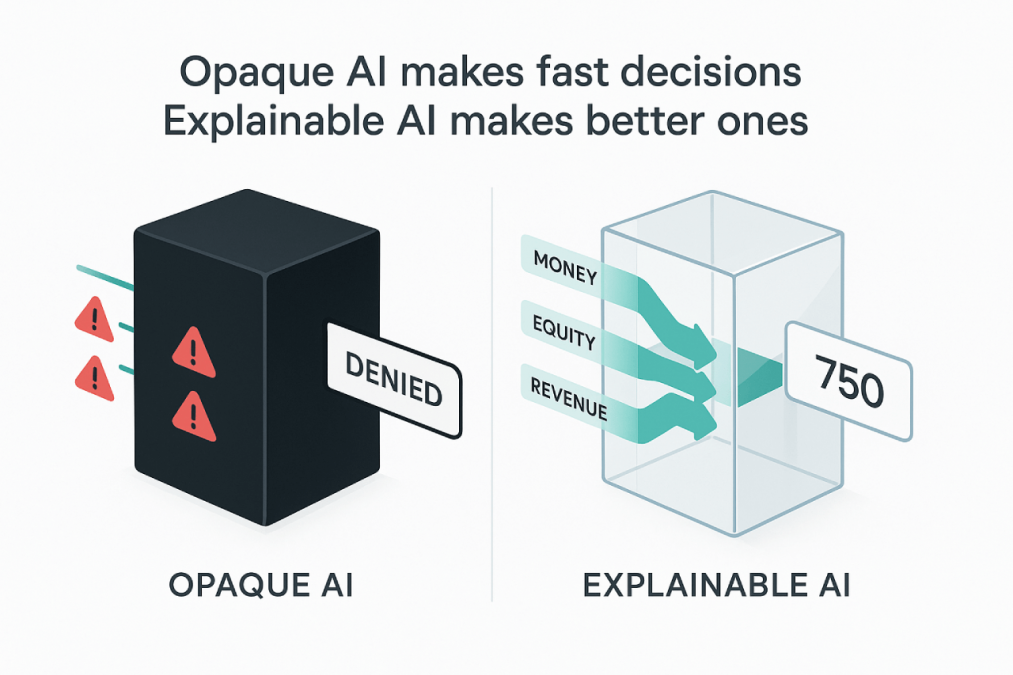

Opaque algorithms make fast decisions. Explainable AI makes better ones.

The Problem With Black-Box AI in Finance

Finance thrives on trust. Investors, regulators, and customers all demand an understanding of risk.

Yet much of today’s AI operates like a sealed vault. It predicts, ranks, and denies, but cannot explain its reasoning.

That might be tolerable when recommending a playlist, but not when millions of dollars and jobs are at stake.

Regulators are catching on. The European Union AI Act and the U.S. Securities and Exchange Commission's scrutiny of automated decision systems both emphasize one word: explainability. If financial institutions cannot justify how their models reached a conclusion, they are gambling with compliance and reputation.

Why Explainability Matters

Explainable AI (XAI) allows humans to see and understand the logic behind an algorithm’s output. It’s not just about ethics; it’s about survival in an industry built on accountability.

- Trust: Stakeholders can validate results.

- Compliance: Regulators demand clarity, not blind confidence.

- Risk Management: If you can trace the cause of a bad prediction, you can fix it.

Without explainability, AI becomes a liability disguised as innovation.

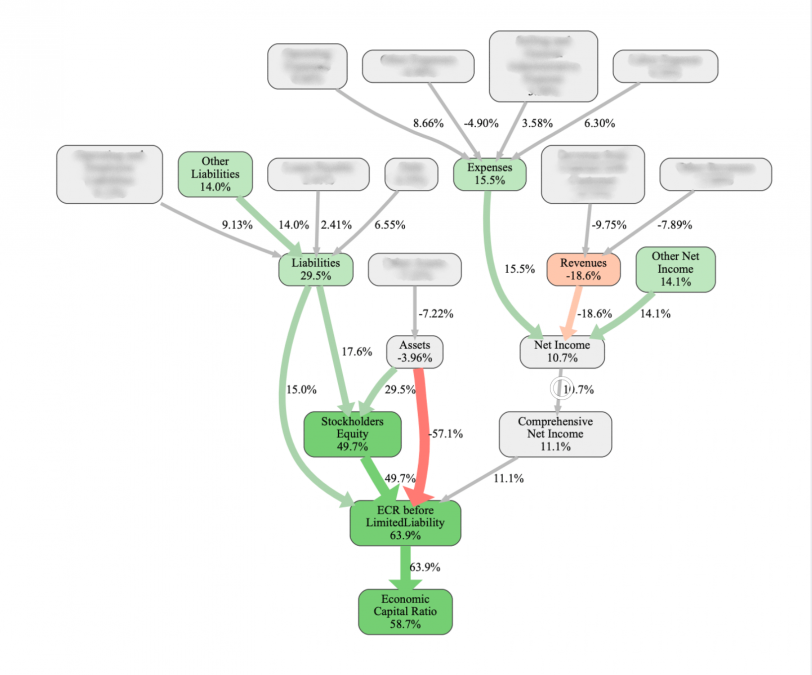

Real Example: RealRate’s Economic Capital Ratio (ECR)

At RealRate, explainability is the foundation of our artificial intelligence financial ratings.

See it for yourself

Example:

CNL Strategic Capital LLC is ranked number one of 49 in the U.S. Finance Services category with an Economic Capital Ratio of 148 percent.

Strong stockholders’ equity significantly boosts its performance, while revenue challenges reduce its overall strength.

With RealRate you can see exactly which financial drivers help or hurt a company. No guessing.

Key Drivers

✔ Stockholders’ Equity contributes +49.7 percentage points

❌ Revenues contribute −18.6 percentage points
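RealRate’s model and reference point are not described here, so the following is only a generic, hypothetical sketch of how signed driver contributions like these can be read. It assumes a simple linear scoring model and measures each feature against a reference company (for example, a peer-group average). The functions `linear_score` and `driver_contributions`, the feature keys, the weights, and all figures are illustrative assumptions, not RealRate’s method or data.

```python
# Hypothetical sketch: additive driver attribution for an ASSUMED linear
# scoring model. Names, weights and figures are illustrative only and are
# not RealRate's model or data.

def linear_score(weights: dict[str, float], intercept: float,
                 company: dict[str, float]) -> float:
    """Score = intercept + sum of weight * feature value."""
    return intercept + sum(w * company[name] for name, w in weights.items())


def driver_contributions(weights: dict[str, float],
                         company: dict[str, float],
                         reference: dict[str, float]) -> dict[str, float]:
    """Per-feature contribution relative to a reference company.

    For a linear model each term is w * (x - x_ref); the terms add up
    exactly to score(company) - score(reference).
    """
    return {name: w * (company[name] - reference[name])
            for name, w in weights.items()}


# Hypothetical inputs: the score is in percent, so contributions are
# in percentage points.
weights = {"stockholders_equity": 0.5, "revenues": 0.25}
intercept = 100.0
company = {"stockholders_equity": 150.0, "revenues": 40.0}
reference = {"stockholders_equity": 50.0, "revenues": 120.0}  # e.g. peer average

drivers = driver_contributions(weights, company, reference)

# List the drivers, largest absolute effect first.
for name, pp in sorted(drivers.items(), key=lambda kv: -abs(kv[1])):
    sign = "+" if pp >= 0 else ""
    print(f"{name}: {sign}{pp:.1f} percentage points")

gap = (linear_score(weights, intercept, company)
       - linear_score(weights, intercept, reference))
print(f"total vs. reference: {gap:.1f} percentage points")
```

With these toy inputs, equity above the reference adds +50.0 points and revenue below the reference subtracts 20.0 points, and the two terms sum to the 30.0-point gap between the two scores. Because every contribution is a difference from a reference, the choice of reference is itself part of the explanation and should be stated alongside the drivers.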

The Cost of Ignoring Explainability

Firms that chase predictive accuracy at the expense of clarity are setting themselves up for failure. Black-box systems may outperform in the short term, but when results contradict intuition or law, nobody can defend them.

We’ve seen examples across banking, insurance, and asset management: models that mispriced risk, denied fair credit, or overvalued portfolios, and nobody could explain why.

When trust breaks, AI adoption collapses.

The Future: Transparent Intelligence

The future of financial AI isn’t more complex; it’s more transparent.

Explainability is how AI earns its social license to operate.

It’s the difference between “the model said so” and “here’s the reasoning.”

RealRate’s explainable approach proves that financial AI can be both powerful and accountable.

By revealing how every factor shapes a company’s rating, we’re redefining fairness and setting a new standard for financial ratings.

Want to see explainable AI in action?

Explore the latest RealRate Rankings 2025 → [Link]

Or learn how RealRate’s Explainable AI redefines financial transparency → [Link]